We know security matters, and that includes being able to control your social media accounts and protect yourself from scams. That’s why we’re testing the use of facial recognition technology to help protect people from celeb-bait ads and enable faster account recovery. We hope that by sharing our approach, we can help inform our industry’s defenses against online scammers.

Protecting People from Celeb-Bait Ads and Impersonation

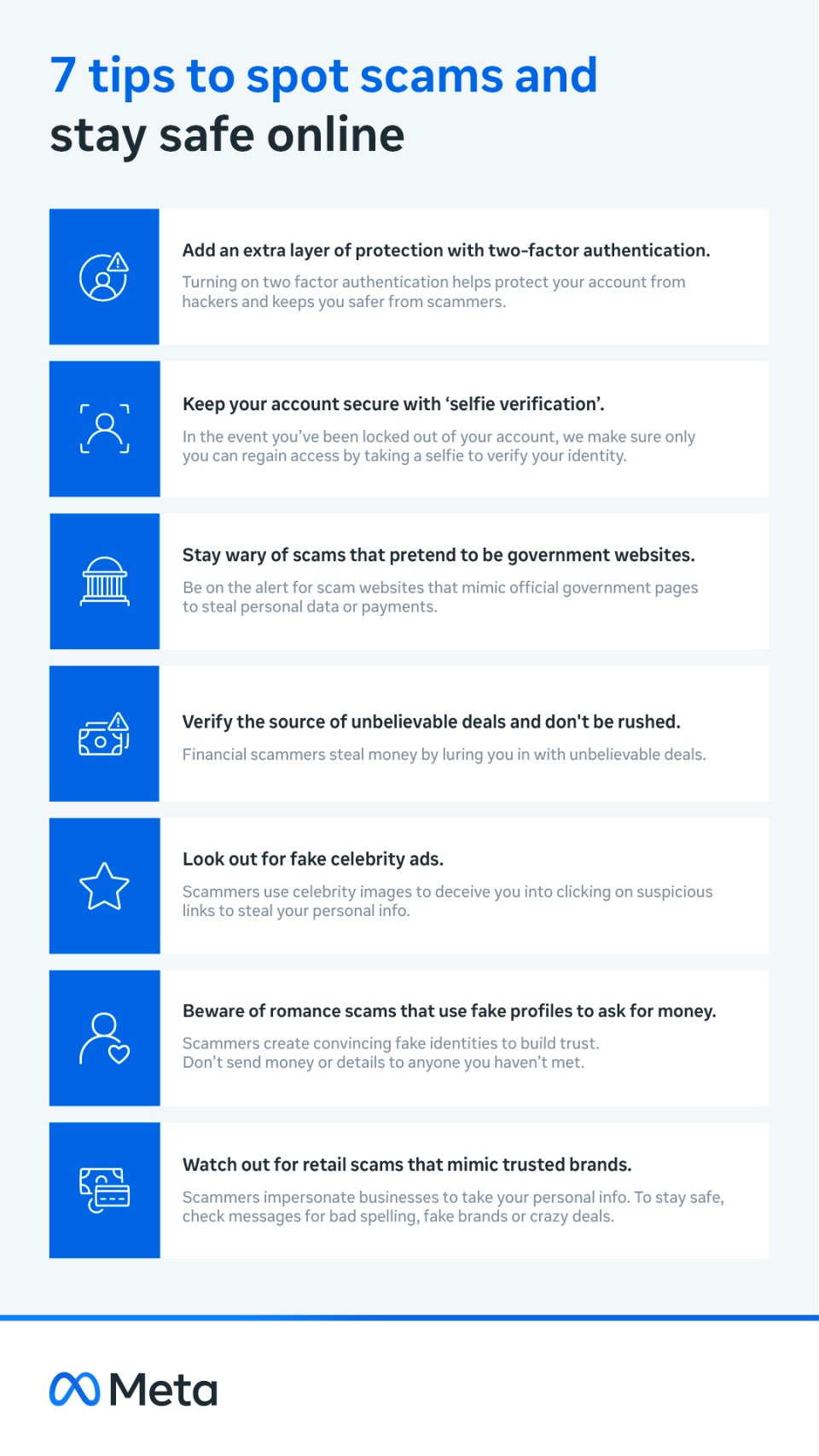

Scammers often try to use images of public figures, such as content creators or celebrities, to bait people into engaging with ads that lead to scam websites, where they are asked to share personal information or send money. This scheme, commonly called “celeb-bait,” violates our policies and is bad for people who use our products.

Of course, celebrities are featured in many legitimate ads. But because celeb-bait ads are designed to look real, they’re not always easy to detect. Our ad review system relies primarily on automated technology to review the millions of ads that are run across Meta platforms every day. We use machine learning classifiers to review every ad that runs on our platforms for violations of our ad policies, including scams. This automated process includes analysis of the different components of an ad, such as the text, image or video.

Now, we’re testing a new way of detecting celeb-bait scams. If our systems suspect that an ad may be a scam that contains the image of a public figure at risk for celeb-bait, we will try to use facial recognition technology to compare faces in the ad to the public figure’s Facebook and Instagram profile pictures. If we confirm a match and determine the ad is a scam, we’ll block it. We immediately delete any facial data generated from ads for this one-time comparison, regardless of whether our system finds a match, and we don’t use it for any other purpose.
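To make the flow above concrete, here is a minimal sketch of a one-time comparison that blocks an ad only when there is both a face match and a scam determination, and that discards the ad's facial data whether or not a match is found. It is an illustration under stated assumptions, not a description of Meta's actual system: the names `review_suspected_ad` and `AdDecision`, the use of plain lists of floats as face embeddings, cosine similarity as the comparison, and the `0.8` threshold are all hypothetical.

```python
from dataclasses import dataclass
from math import sqrt
from typing import List


@dataclass
class AdDecision:
    blocked: bool
    reason: str


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine similarity of two vectors; returns 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def review_suspected_ad(
    ad_faces: List[List[float]],       # hypothetical embeddings of faces found in the ad
    profile_faces: List[List[float]],  # hypothetical embeddings of the public figure's profile pictures
    ad_is_scam: bool,                  # outcome of a separate scam determination (assumed given)
    threshold: float = 0.8,            # assumed match threshold, chosen for illustration only
) -> AdDecision:
    try:
        # One-time comparison: does any face in the ad match any profile picture?
        matched = any(
            cosine_similarity(ad_face, profile_face) >= threshold
            for ad_face in ad_faces
            for profile_face in profile_faces
        )
    finally:
        # Discard the facial data generated from the ad, match or no match.
        ad_faces.clear()

    if matched and ad_is_scam:
        return AdDecision(True, "face match and scam determination")
    return AdDecision(False, "no confirmed match or not a scam")
```

In this sketch the deletion sits in a `finally` clause so that the ad's facial data is cleared even if the comparison raises an error, which mirrors the "regardless of whether our system finds a match" commitment in the text.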

Early testing with a small group of celebrities and public figures shows promising results in increasing the speed and efficacy with which we can detect and enforce against this type of scam. In the coming weeks, we’ll start showing in-app notifications to a larger group of public figures who’ve been impacted by celeb-bait, letting them know we’re enrolling them in this protection. Public figures enrolled in this protection can opt out in their Accounts Center anytime.

We’ve also seen scammers impersonate public figures by creating impostor accounts, with the goal of duping people into engaging with scam content or sending money. For example, scammers may claim that a celebrity has endorsed a specific investment offering or ask for sensitive personal information in exchange for a free giveaway. We currently use detection systems and user reports to help identify potential impersonators. We’re exploring adding another step to help find this kind of fake account faster. This added layer of defense would work by using facial recognition technology to compare profile pictures on the suspicious account to a public figure’s Facebook and Instagram profile pictures. We hope to test this and other new approaches soon.

Helping People Regain Access to Their Accounts

People can lose access to their Facebook or Instagram accounts when they forget their password, lose their device or are tricked into turning their password over to a scammer. If we think an account has been compromised, we require the account holder to verify their identity by uploading an official ID or an official certificate that includes their name before they can regain access.

We’re now testing video selfies as a means for people to verify their identity and regain access to compromised accounts. The user will upload a video selfie and we’ll use facial recognition technology to compare the selfie to the profile pictures on the account they’re trying to access. This is similar to identity verification tools you might already use to unlock your phone or access other apps.

As soon as someone uploads a video selfie, it will be encrypted and stored securely. It will never be visible on their profile, to friends or to other people on Facebook or Instagram. After this comparison, we immediately delete any facial data generated, regardless of whether there’s a match.

Video selfie verification expands on the options for people to regain account access, only takes a minute to complete and is the easiest way for people to verify their identity. While we know hackers will keep trying to exploit account recovery tools, this verification method will ultimately be harder for hackers to abuse than traditional document-based identity verification.

Responsible Approach

Scammers are relentless and continuously evolve their tactics to evade detection. We’re just as determined to stay ahead of them and will keep building and testing new technical defenses to strengthen our detection and enforcement capabilities. We have vetted these measures through our robust privacy and risk review process and built important safeguards, like sending notifications to educate people about how they work, giving people controls and ensuring we delete people’s facial data as soon as it’s no longer needed. We want to help protect people and their accounts, and while the adversarial nature of this space means we won’t always get it right, we believe that facial recognition technology can help us be faster, more accurate and more effective. We’ll continue to discuss our ongoing investments in this area with regulators, policymakers and other experts.

By Meta | Created at 2024-10-29 21:19:15 | Updated at 2024-10-30 19:24:04
