While it has long been a world-ending threat in science fiction, U.S. Air Force and Space Force officials see artificial intelligence (AI) playing important, if not critical, roles in the command and control enterprise at the heart of America’s nuclear deterrent capabilities.

AI has the potential to help speed up decision-making cycles and ensure that orders get where they need to go as fast and securely as possible. It could also be used to assist personnel charged with other duties, from intelligence processing to managing maintenance and logistics. The same officials stress that humans will always need to be in or at least on the loop, and that a machine alone will never be in a position to decide to employ nuclear weapons.

A group of officers from the Air Force and Space Force talked about how AI could be used to support what is formally called the Nuclear Command, Control, and Communications (NC3) architecture during a panel discussion at the Air & Space Forces Association’s 2025 Warfare Symposium, at which TWZ was in attendance. The current NC3 enterprise consists of a wide array of communications and other systems on the surface, in the air, and in space designed to ensure that a U.S. nuclear strike can be carried out at any time regardless of the circumstances.

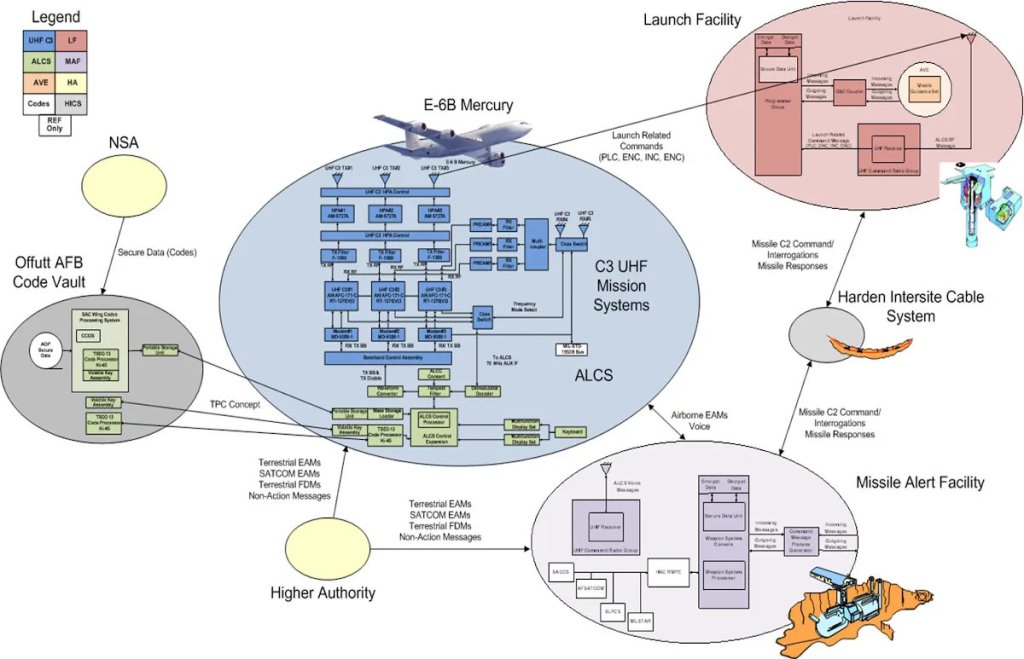

A now dated unclassified graphic showing just a portion of the elements that the NC3 enterprise consists of, giving a good sense of its scale and scope. USAF

“If we don’t think about AI, and we don’t consider AI, then we’re going to lose, and I’m not interested in losing,” Maj. Gen. Ty Neuman, Director of Strategic Plans, Programs and Requirements at Air Force Global Strike Command (AFGSC), said yesterday. “So we absolutely have to figure this out.”

“AI has to be part of what the next generation NC3 [architecture] is going to look like. We have to be smart about how we use that technology,” Neuman continued. “Certainly the speed is probably the most critical thing. There’s going to be so much data out there, and with digital architectures, resilient architectures, and things like that, we have to take advantage of the speed at which we can process data.”

Neuman also outlined a role for AI to help with secure communications.

“The way I would envision this actually being in the comm world, would be using AI to – if a message is being sent or a communication is being sent from the National Command Authority to a shooter, AI should be able to determine what is the fastest and most secure pathway for me to get that message from the decision maker to the shooter,” the general explained. “As a human operator on a comm system in today’s world, I will not have the ability to determine what is the most secure and safest pathway, because there’s going to be, you know, signals going in 100 different directions. Some may be compromised. Some may not be compromised. I will not be able to determine that, so AI has to be part of that.”

The National Command Authority is the mechanism through which the President of the United States would order a nuclear strike. America currently has a nuclear triad of “shooters” consisting of B-2 and B-52 bombers, silo-based Minuteman III intercontinental ballistic missiles (ICBM), and Ohio class ballistic missile submarines. Air Force F-15E Strike Eagle combat jets, as well as at least some F-35A Joint Strike Fighter and F-16 Viper fighters, can also carry B61 tactical nuclear bombs.

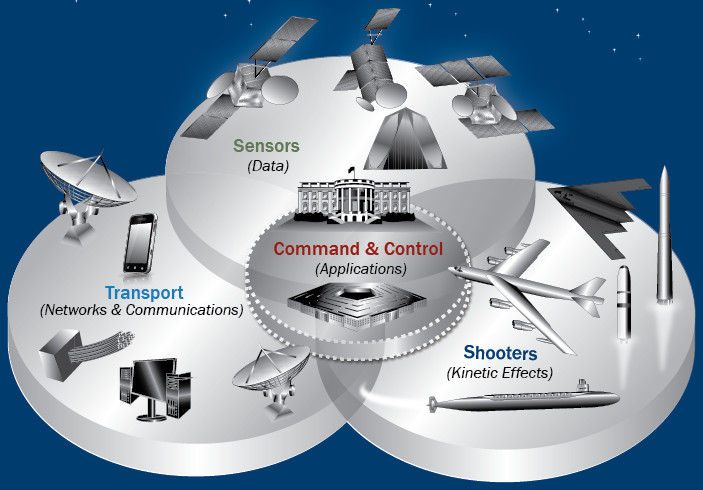

A graphic offering a very basic look at the elements of the NC3 architecture, as well as the “shooters” of the current nuclear triad. DOD

AI could also be valuable in the NC3 enterprise beyond helping with decision making and communications.

“We can analyze historical data and identify trends, and those AI tools could be used in a predictive manner. We could use it on our systems to proactively manage just like our system maintenance, be able to plan the upgrades to the system, and reducing the risks to unexpected interruptions or disruptions,” Space Force Col. Rose, Deputy Director for Military Communications and Positioning, Navigation, and Timing (PNT) at Space Systems Command, another one of the panelists, said. “Additionally, the data or trends related to cybersecurity, or being able to see what our adversaries are up to, could also be useful for decision makers.”

Though made just in passing, Rose’s comment about cybersecurity offers a notable point about how AI could be useful for helping to defend networks like the NC3 architecture, both within the cyber domain and across the radiofrequency (RF) spectrum.

The nuclear decision-making space has always been one with short timetables, including when it comes to securely disseminating nuclear strike orders. For decades now, it has been understood that the President will have at best tens of minutes, if not substantially less, to explore available courses of action and pick one or more to execute once an incoming nuclear threat has been spotted and positively identified. Many of those courses of action would only be viable within certain time windows and any disruption in the decision-making process would have devastating consequences.

There are also already efforts to integrate AI-driven capabilities into other decision-making spaces, including at the tactical level, across the Department of Defense. AI tools are already being used to help monitor domestic airspace and process intelligence, as well as assist with maintenance, logistics, and other sustainment-related functions.

At the same time, there have been concerns about the accuracy of the models that underpin existing AI-driven capabilities, and the idea of automating anything to do with nuclear weapons is particularly sensitive. Science fiction and other corners of popular culture are also full of stories where turning over aspects of America’s nuclear deterrent arsenal to a machine leads to an apocalypse or risks doing so. The 1983 movie WarGames and the Terminator franchise – starting with the eponymous film in 1984, but better emphasized by the opening scene to the 1991 sequel Terminator 2: Judgment Day – are prime examples.

The panelists yesterday acknowledged the concerns about integrating AI into the NC3 architecture specifically.

“When we think about nuclear enterprise and our nuclear capabilities, as well as the assured comm[unications] that we absolutely have to have, we have to have a human in the loop. As good as AI is, as good as computer process and things like that could be, it’s really only as good as the data that is fed into it,” Maj. Gen. Neuman said. “Therefore, if the data is corrupted, then we have no way of actually determining whether the data or the output is actually there. So, therefore, we absolutely have to have the human in the loop there.”

“The human, the loop should really just be, you know, there to inform and make sure that the data that’s being transmitted is exactly right,” he also said.

“I think it’s important to push the boundaries of AI, and deliver innovative solutions that are reliable and trustworthy, but I also recognize that the integration of AI, specifically in NC3 systems, presents some challenges and risks,” Col. Rose added. “I think that with robust testing, validation, and implementing oversight mechanisms, I think we can find a way to mitigate some of those risks and challenges, and ultimately deliver AI systems that operate as they’re intended.”

“I would just foot stomp to those people that are maybe not as familiar with this mission space: while we do need everything we just talked about, there will always be a human making the decision on whether to employ this weapon, and that human will be the President [of the] United States,” Air Force Lt. Gen. Andrew Gebara, Deputy Chief of Staff for Strategic Deterrence and Nuclear Integration, who was also on yesterday’s panel, stressed. “So for those of you that are concerned out there, don’t be concerned about that. There will always be that human in the loop.”

It is worth noting that yesterday’s panel was hardly the first time U.S. military officials have publicly advocated for integrating AI into nuclear operations.

“We are also developing artificial intelligence or AI-enabled human-led decision support tools to ensure our leaders are able to respond to complex, time-sensitive scenarios,” Air Force Gen. Anthony Cotton, head of U.S. Strategic Command (STRATCOM), said in a keynote address at the 2024 Department of Defense Intelligence Information System worldwide conference last October. “By processing vast amounts of data, providing actionable insights and enabling better informed and more timely decisions, AI will enhance our decision-making capabilities, but we must never allow artificial intelligence to make those decisions for us. Advanced systems can inform us faster and more efficiently, but we must always maintain a human decision in the loop.”

Air Force Gen. Anthony Cotton, head of U.S. Strategic Command (STRATCOM). DOD Staff Sgt. Eugene Oliver

Cotton elaborated further on this during a talk the Center for Strategic and International Studies (CSIS) think tank hosted in November 2024.

“If we think that United States Strategic Command can’t take advantage of artificial intelligence to preserve the terabytes of data that would otherwise hit the floor, and still do things the old fashioned way, as far as, not decision making, but planning efforts, efficiencies, then we might as well … move out of the beautiful building we have and just go into something that has rotary phones,” he said.

“I [would] much rather have an opportunity, if asked for the, you know, by the President – for example, the President says, ‘well, here’s what I want you to do’ – I [would] much rather say, ‘well, Mr. President, hold on. I’ll get back to you in a couple of hours, and we’ll talk about how we can execute that’,” Cotton continued. “You know, it would be much nicer for me to be able to kind of go, ‘Yes, Mr. President, give me a couple of minutes, and we’ll come back to you with some options.’ That’s what I’m talking about, right?”

“You know, in WarGames, it has this machine called the WOPR [War Operation Plan Response, pronounced ‘whopper’]. So, the WOPR actually was that AI machine that everyone is scared about. And guess what? We do not have, you know, a WOPR in STRATCOM headquarters. Nor would we ever have a WOPR in STRATCOM headquarters,” Cotton added. “That’s not what I’m talking about. What I’m talking about is, how do I, you know, how do I get and become efficient on ISR [intelligence, surveillance, and reconnaissance] products, you know? How do I get, you know, efficient on understanding what’s the status of my forces? You know, those are things that AI and machine learning can absolutely help us and really shave a lot of time off on being able to do those type of things.”

Whether assurances like these, or those from Gebara, Neuman, and Rose yesterday, about a human always being in the loop will allay concerns about the use of AI in the NC3 architecture remains to be seen. What is clear is that this discussion is not going away any time soon.


By The War Zone | Created at 2025-03-07 00:31:11 | Updated at 2025-03-08 23:57:47
